a. Introduction

Since 2006 the School of Mathematics and Statistics has incorporated computer-based assessments (CBAs) into its summative, continuous assessment of undergraduate courses, alongside conventional written assignments. These CBAs present mathematical questions, which usually feature equations with randomized coefficients, and then receive and assess a user-input answer, which may be a numerical or algebraic expression. Feedback in the form of a model solution is then provided to the student.

From 2006 until the last academic year (2011/2012), the School employed the commercial i-assess CBA software. However, this year (2012/2013) the School rolled out a CBA package developed in-house, Numbas, to its stage 1 undergraduate cohort. This software offers greater control and flexibility than its predecessor to optimize student learning and assessment. As such, this was an opportune time to gather the first formal student feedback on CBAs within the School. This feedback, gathered from the stage 1 cohort over two consecutive years, would provide insight into the student experience and perception of CBAs, assess the introduction of the new Numbas package, and stimulate ideas for further improving this tool.

After an overview of CBAs in Section b and their role in mathematics pedagogy in Section c, their use in the School of Mathematics and Statistics is summarized in Section d. In Section e the gathering of feedback via questionnaire is outlined and the results presented. In Section f we proceed to analyze the results in terms of learning, student experience, and areas for further improvement. Finally, in Section g, some general conclusions are presented.

b. A Background to CBAs

Box 1: Capabilities of the current generation of mathematical CBA software.

- Questions can be posed with randomized parameters such that each realization of the question is numerically different.

- Model solutions can be presented for each specific set of parameters.

- Algebraic answers can be input by the user (often via LaTeX commands), usually supported by a previewer for visual checking.

- Judged mathematical entry (JME) is employed to assess the correctness of algebraic answers.

- Questions can be broken into several parts, with a different answer for each part.

- On top of algebraic/numerical answers, more rudimentary multiple-choice, true/false and matching questions are available.

- Automated entry of CBA mark into module mark database.

Computer-based assessment (CBA) is the use of a computer to present an exercise, receive responses from the student, collate outcomes/marks and present feedback [10]. The use of CBAs has grown rapidly in recent years, often as part of computer-based learning [3]. Possible question styles include multiple choice and true/false questions, multimedia-based questions, and algebraic and numerical “gap fill” questions. The merits of CBAs are that, once set up, they provide economical and efficient assessment, instant feedback to students, flexibility over location and timing, and impartial marking. However, CBAs also have significant restrictions. Perhaps their overriding limitation is that they lack the intelligence to assess methodology; they simply judge the final answer to be right or wrong. Other issues are the high cost of set-up, the difficulty of awarding method marks, and the requirement for computer literacy [4].

In the early 1990s, CBAs were pioneered in university mathematics education through the CALM [6] and Mathwise [7] computer-based learning projects. At a similar time, commercial CBA software became available, e.g. the Question Mark Designer software [8]. These early platforms featured rudimentary question types such as multiple choice, true/false and input of real-number answers. Motivated by the need to assess a wider range of mathematical information, the facility to input and assess algebraic answers emerged by the mid-1990s via computer-algebra packages. First was Maple’s AIM system [5, 14], followed by, e.g., CalMath [8], STACK [12], Maple TA [13], WebWork [14], and i-assess [15]. The current generation of mathematics CBA suites shares the same technical capabilities, summarized in Box 1.
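The first two capabilities in Box 1 (randomized parameters and a matching model solution) can be illustrated with a minimal Python sketch. It is not taken from any of the packages named above: the question ("solve ax + b = 0"), the function names and the marking tolerance are all hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Realization:
    """One numerically distinct instance of the same question."""
    a: int
    b: int
    prompt: str
    answer: float


def make_question(seed: int) -> Realization:
    """Build one realization of 'solve a*x + b = 0' from a seed (illustrative question)."""
    rng = random.Random(seed)
    a = rng.choice([n for n in range(-9, 10) if n != 0])  # avoid a = 0
    b = rng.randint(-9, 9)
    prompt = f"Solve {a}x + ({b}) = 0 for x."
    return Realization(a, b, prompt, -b / a)


def mark(realization: Realization, submitted: float, tol: float = 1e-6) -> bool:
    """Right/wrong marking of a numerical answer (the tolerance is an assumption)."""
    return abs(submitted - realization.answer) <= tol


def model_solution(r: Realization) -> str:
    """Model solution written for this specific set of parameters."""
    return f"Rearranging gives x = -({r.b})/({r.a}) = {r.answer:.4g}."
```

Seeding the generator per student or per attempt means the same realization can be regenerated later, for example to display the model solution that matches the numbers the student actually saw.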

c. Assessment and Learning Styles

It is well reported in the pedagogic literature that students, while accomplished at routine exercises and in possession of extensive subject-specific knowledge, are often unable to demonstrate a true understanding of the subject, for example when presented with an unfamiliar but simple question [16, 17]. This indicates a dominance of surface learning and lower cognitive skills, rather than a deep understanding at a higher cognitive level. Mathematics students with a deeper understanding are reported to be more successful (see [11, 17] and references therein). In the interests of higher quality in education, a deep learning approach is therefore encouraged.

Since students are primarily motivated by obtaining high marks, assessment drives learning and should reflect the higher skills we covet. Bloom’s taxonomy provides a well-known general taxonomy of learning skill-sets [18]. Within mathematics it is possible to develop a more specific taxonomy of question types and skills, e.g. that proposed by Sangwin et al. [11]:

1. Factual recall

2. Carry out a routine calculation or algorithm

3. Classify some mathematical object

4. Interpret situation or answer

5. Prove, show, justify (general argument)

6. Extend a concept

7. Construct example/instance

8. Criticize a fallacy

The required depth of understanding increases down the list in a hierarchical manner. Roughly speaking, we may identify levels 1-4 with the application of well-understood knowledge/routines in bounded, “seen” situations, i.e. surface approaches, and levels 5-8 with higher skills and deeper learning, often based on open-ended, unseen question types. While all levels of skill can be assessed via written assignments (the works [11, 17] provide examples of mathematical questions at each level), it is pertinent to ask which skill levels can be assessed via CBA. Questions at levels 1-4 typically possess unique mathematical (numerical or algebraic) answers (e.g. “Specify the solution to the equation y’=sin(x)”), making them well suited to assessment via computer. Questions at levels 5-8, however, do not typically feature unique answers and emphasize methodology rather than the final answer (e.g. “Prove that the theorem X holds”). This generally renders them unsuitable for automated assessment. In certain scenarios, however, it is possible to invoke the higher-level skills through CBAs. Sangwin et al. [11] demonstrated that level 7 skills (construct example/instance) can be assessed via CBA: if the question is formulated as “Find a function which satisfies the properties X and Y”, the computer can numerically test whether the input answer satisfies the properties X and Y.
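The numerical testing of a “construct an example” answer can be sketched as follows. This is an illustrative Python sketch, not the method of [11]: the two properties (f(0) = 1 and f even), the sampling interval, the sample count and the tolerance are all assumptions chosen for the example.

```python
import math
import random


def satisfies_properties(f, samples: int = 200, tol: float = 1e-9, seed: int = 0) -> bool:
    """Numerically test a submitted function against two illustrative properties:
    X: f(0) = 1, and Y: f is even, i.e. f(x) = f(-x), sampled on [-5, 5]."""
    rng = random.Random(seed)
    try:
        if abs(f(0.0) - 1.0) > tol:
            return False  # property X fails
        for _ in range(samples):
            x = rng.uniform(-5.0, 5.0)
            # relative tolerance so that large function values are not penalized
            if abs(f(x) - f(-x)) > tol * max(1.0, abs(f(x))):
                return False  # property Y fails at this sample point
    except (ValueError, ZeroDivisionError, OverflowError):
        return False  # the function is undefined somewhere in the sampled range
    return True


# Many different answers are acceptable, which is the point of a level 7 question.
print(satisfies_properties(math.cos))              # True
print(satisfies_properties(lambda x: 1 + x ** 2))  # True
print(satisfies_properties(math.exp))              # False: not even
```

Passing a finite set of sample points does not prove that the properties hold everywhere, so a test of this kind can in principle accept a wrong answer; the sample count and tolerance trade marking cost against that risk.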

d. CBAs in the School of Mathematics and Statistics

i. CBA Strategy: Past and Present

CBAs were first introduced in the School in 2006 for summative, continuous assessment, alongside conventional written assignments, with the purpose of:

- Reducing the time and expense associated with assessing written assignments (with class sizes of up to 200 students).

- Focussing on standard, “seen” questions, similar to those encountered in lectures (written assignments remaining the domain of more challenging “unseen” types of questions).

- Supporting learning of core material by giving students extra practice of such questions.

To motivate students to undertake them, the CBAs were made summative, each carrying half the mark of a written assignment.

CBAs are currently employed in all core stage 1 modules and many later modules. A typical 10-credit stage 1 module involves four CBAs and four written assignments, each worth 2.5% of the module mark[1]. Each CBA follows a two-week cycle. For the first week the CBA is in an open “practice mode”, with model solutions available. For the second week it is in “test mode”, in which the student has a single attempt. The practice and test modes present the same set of questions, apart from the randomized parameters.

Up until this academic year, CBAs in the School were delivered using the i-assess package [15]. This academic year (2012/2013) began the transition to a new CBA package developed in-house, Numbas. We next briefly describe some characteristics of these two packages, which serve to motivate the move to Numbas.

ii. i-assess CBA Software

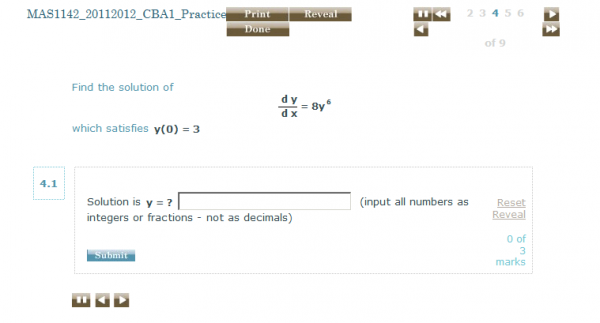

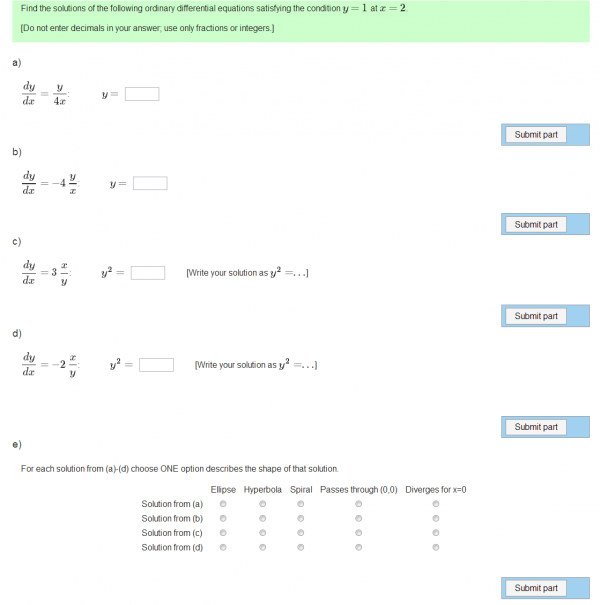

A typical CBA question within the commercial i-assess package is illustrated in Figure 1 (top). While i-assess features the standard capabilities in Box 1, its use at Newcastle revealed the following limitations:

- A dated user interface and question/solution display.

- The user interface and question layout could not be edited.

- Some unfavourable quirks, e.g. logging out after each exam.

- Availability only through the university computing network.

- Instability of software, especially during high usage.

iii. Numbas CBA Software

Numbas is an open-source CBA package with the same capabilities as i-assess, and additionally offers:

- Improved user interface and navigation.

- Complete control of the front-end and back-end (within technological limits).

- It can be run via a web browser, VLE or local executable file (e.g. on USB or DVD).

A screenshot of a Numbas test is shown in Figure 1 (bottom).

e. Student Feedback on CBAs

i. Polling of Students via Questionnaire

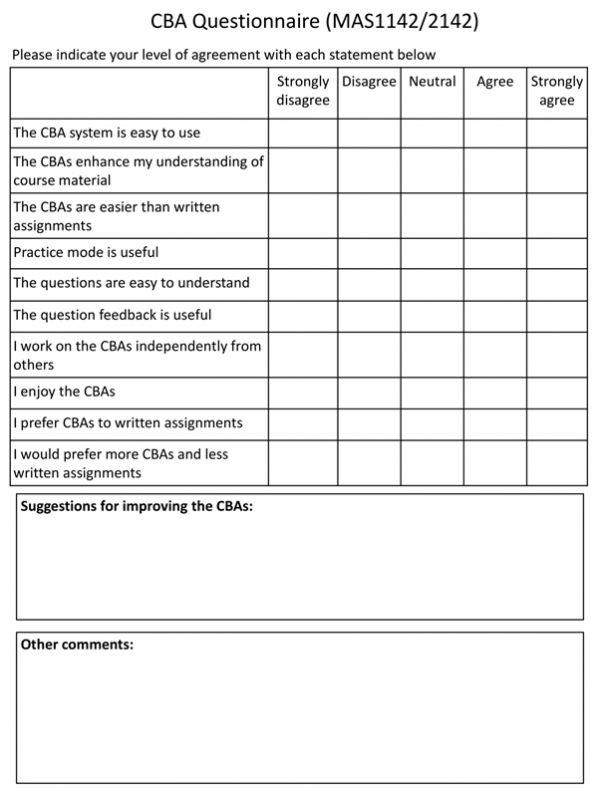

The CBA feedback was gathered by polling the stage 1 cohort (through the module MAS1142 Modelling with Differential Equations) via questionnaire over two consecutive years (the final year under i-assess and the first year under Numbas). Over the two years, the course had no significant structural or curriculum changes, and the student groups can be expected to have very similar abilities, allowing for a meaningful comparison. The questionnaire, shown in Appendix A, featured 10 Likert-type questions with the intention of probing the student perception of:

- The CBA user interface.

- The usefulness of the CBAs in supporting course material.

- The level of interest and difficulty of CBAs (in comparison to written assignments).

- The quality of the questions and feedback/model solutions.

- The manner in which students undertake the CBAs, e.g. group versus individual working.

Students were invited to write further comments, including suggestions for improvement.

ii. Student Feedback on CBAs (2011/2012)

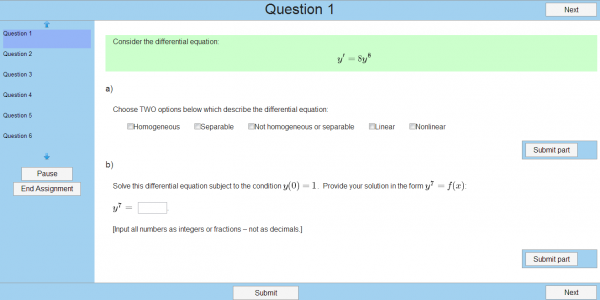

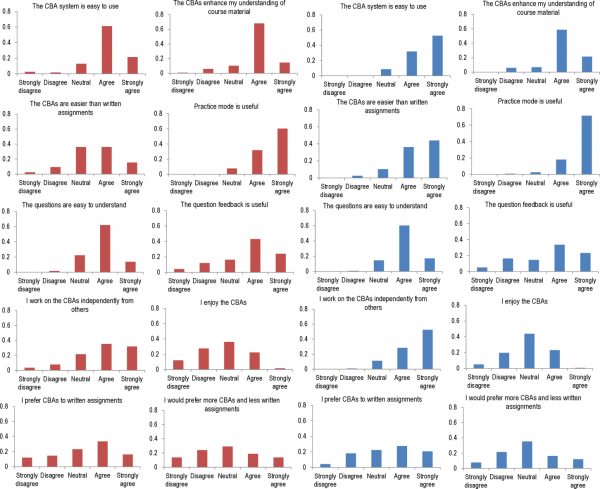

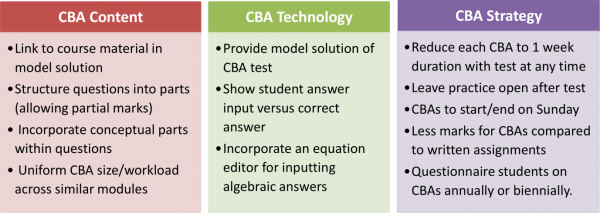

Questionnaire results from 2011/2012 (under i-assess), based on 116 respondents, are shown on the left of Figure 2. A summary of the student suggestions is shown in Figure 3 (top).

iii. Student Feedback on CBAs (2012/2013)

From 2011/2012 to 2012/2013, delivery of the CBAs moved to Numbas, with its modified user interface, navigation and question display (Figure 1). The following changes to the question and solution content were also made, based on student suggestions:

- A number of questions in 2011/2012 were based on general material outside the module and were anomalously challenging and unpopular (see Figure 3 (top)). These questions were removed. This also served to make the number of questions in this module’s CBAs more consistent with its sibling core modules (5-6 questions).

- The model solutions, inherited from a previous lecturer, were modified to include more explanation of concepts and mathematical steps, and follow the style of the lectures.

- Approximately half of the CBA questions were broken up into multiple parts, each requiring a separate answer, so as to enable partial marks.

The average mark of the first two CBAs[2] in 2011/2012 was 64.2% and in 2012/2013 it was 70.2%. Questionnaire results from 2012/2013 are shown in the right-hand columns of Figure 2, while the suggestions from students are summarized in Figure 3 (bottom). General student comments on CBAs, from both years, are summarized in Box 2.

Box 2: General comments on CBAs made by students from both polled years.

- A good way to get extra marks for the module/generally a good system which is easy to use.

- Very useful for revision…useful in the lead up to exams.

- Very useful for practising questions and understanding core materials.

- The worked solutions in practice mode are good when you don’t understand a topic.

- Would prefer CBAs just as a revision tool.

- Would rather do a second set of written assignments. CBAs sound like a good idea but you can’t beat doing questions with pen and paper.

- Would rather have paper assignments. Remember and learn more.

- CBAs are brilliant! I understand the course material so much better because of CBAs.

- I like the mix of CBAs and written assignments.

- Give more marks for CBAs because people prefer them to written assignments.

- I prefer written assignments to CBAs because they test your understanding of the course, but CBAs are still very useful for practicing standard questions.

- Given the value of marks from written assignments and the stress they cause compared to CBAs, why can’t we have CBAs every week instead of written assignments?

f. Analysis and Discussion

Before discussing the feedback it should be noted that the student responses referred to CBAs across all modules.

i. Student Perception of CBAs

We first describe the students’ general perception and engagement with CBAs across both years. There was a strong consensus that the CBAs were easy to use, suggesting that the user interface, navigation, etc, were already at a high level of optimization. The students found the questions, overall, easy to understand, likely due to the simple, “seen” type of questions presented.

There was a strong consensus that CBAs enhance the understanding of the course. Students commented (Box 2) that CBAs provide useful practice questions and aid in understanding the core materials. The strong positive response on the usefulness of CBA practice mode further emphasizes the effectiveness of CBAs as a learning tool.

The usefulness of the question feedback was deemed to be, overall, slightly positive, but with a considerable spread of sentiment. Negative sentiment may have arisen over unclear model solutions and a lack of model solutions to the test (Figure 3). Although not specifically stated, the inherent lack of personal, intelligent feedback may also contribute to this.

Students somewhat favour independent working on CBAs, although considerable group work is also evident. The cause of the slight shift towards more independence is unclear.

The perception of CBAs being, on average, easier than written assignments is not surprising given the focus on “seen” questions and the extensive practice mode.

The enjoyment of CBAs was on average neutral but with some strong love/hate sentiment. CBAs were slightly preferred over written assignments but there was no net call for more CBAs. This suggests a recognition that written assignments test deeper understanding and are more satisfying. Those who would prefer more CBAs cite the ease and reduced stress of CBAs (Box 2). These mixed but net neutral responses indicate that the current combination of CBAs and written assignments provides a suitable balance.

ii. Improvements from 2011/2012 to 2012/2013

The improved front-end of Numbas eradicated many of the cosmetic complaints from i-assess. Indeed the ease-of-use rated even higher with Numbas.

CBAs were deemed slightly easier in 2012/2013, likely due to the removal of troublesome questions (the higher average mark supports this). The changes to the MAS1142 CBA questions and solutions produced no discernible improvement in the student opinion of CBA feedback. Perhaps these modifications were too conservative, or too minor to affect the overall CBA experience across all modules.

The lack of reported technical problems in 2012/2013 confirms the enhanced stability of the Numbas package.

iii. Future Improvements

Here we discuss further ways to improve the student experience, and the efficacy of learning and assessment with CBAs, under the umbrellas of CBA technology, content and strategy.

Technical Aspects

The single most common issue raised by students across both years was the “all-or-nothing” nature of the marking, with no provision of half or method marks. This is inherent to employing a computer to mark the answer [8]. Some lecturers would argue that this is justified, since mathematics requires absolute rigour and precision, and so why shouldn’t the marking scheme demand this? However, this scheme does little for student morale and their perception of CBAs. Nor does it benefit ranking of ability if students either score zero or full marks.

It is possible to incorporate some intelligence into the electronic marking process. To deduce how and where a solution went wrong (and thereby apportion marks accordingly), one must either know the common mistakes and check for these in the submitted answer, or pass the answer through a set of mathematical tests to deduce “how correct” it is. These features have already been demonstrated in the STACK CBA package [9, 12]. However, this intelligence must be carefully designed and hard-coded into each question, making it labour-intensive, and it is not suited to all question types. A more amenable way to compensate for the lack of method marks is to structure the question into sub-parts, as discussed under Content below.
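Checking a submitted answer against known common mistakes can be sketched in a few lines of Python. This is a hypothetical illustration and does not reproduce how STACK implements the idea: the question (evaluate the integral of ax from 0 to b), the two “common mistakes”, the credit fractions and the feedback strings are all invented for the example.

```python
def mark_with_known_mistakes(a: float, b: float, submitted: float, tol: float = 1e-9):
    """Mark 'evaluate the integral of a*x from 0 to b' (correct value a*b^2/2).

    Returns (fraction_of_marks, feedback). The mistake list and the credit
    fractions are hypothetical and would be hard-coded per question.
    """
    correct = a * b ** 2 / 2
    known_mistakes = [
        (a * b ** 2, 0.5, "It looks as if the factor of 1/2 from integrating x was omitted."),
        (a * b, 0.25, "It looks as if the integrand was evaluated at b rather than integrated."),
    ]
    # The correct answer is tested first, so a mistake value that happens to
    # coincide with it for particular parameters still earns full marks.
    if abs(submitted - correct) <= tol:
        return 1.0, "Correct."
    for value, credit, feedback in known_mistakes:
        if abs(submitted - value) <= tol:
            return credit, feedback
    return 0.0, "Incorrect; see the model solution."


print(mark_with_known_mistakes(3, 2, 6))   # (1.0, 'Correct.')
print(mark_with_known_mistakes(3, 2, 12))  # half credit: factor of 1/2 omitted
```

The sketch makes the labour cost visible: each question needs its own list of anticipated errors, and any mistake that was not anticipated still scores zero.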

Algebraic answers are input as typed syntax. This is itself challenging and error-prone, with the unfortunate result that a student may lose all marks despite knowing the correct answer. This could be improved by introducing a facility for the student to check the input answer for obvious syntax errors, as incorporated into the AIM and STACK packages [9]. This source of errors could be largely removed by replacing syntactic input with a clickable equation editor, as is already successfully employed in the Maple TA package [13].
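A pre-submission check for obvious syntax errors could be as simple as the following Python sketch. It is not the checker used by AIM or STACK; the particular checks (bracket matching, empty input, trailing operator) and the message wording are assumptions chosen to illustrate the idea.

```python
def check_syntax(expr: str) -> list:
    """Return a list of obvious syntax problems in a typed answer (empty if none found)."""
    problems = []
    depth = 0
    for i, ch in enumerate(expr):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                problems.append(f"unmatched ')' at position {i}")
                depth = 0  # reset so that later brackets are still checked
    if depth > 0:
        problems.append(f"{depth} unclosed '('")
    stripped = expr.strip()
    if not stripped:
        problems.append("empty answer")
    elif stripped[-1] in "+-*/^":
        problems.append("expression ends with an operator")
    return problems


print(check_syntax("2*(x+1)"))  # []
print(check_syntax("2*(x+1"))   # ["1 unclosed '('"]
```

A check like this only catches malformed input; it says nothing about whether a well-formed answer is mathematically correct, so it can be offered before submission without giving away marks.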

An inherent artefact of using questions with randomized parameters is that astute students may be able to spot the pattern in the answer (through multiple realizations) and deduce future answers without direct solution. This possibility can be reduced by suitable question design. While this problem would be removed altogether if all questions (or at least the ones in the CBA test) were different, this requires a much greater bank of questions to be written.

Content

The most direct and amenable control over CBAs lies with the teacher, through the choice of material and the design of questions and solutions. To improve on “all-or-nothing” marking, questions can be divided into parts, each carrying a proportion of the marks. While this may produce questions which are overly formulaic and guided, it is also educational in demonstrating the cognitive components in solving a question. To approximate method marks further, one may incorporate a facility to “reveal” the solutions to the early parts, such that a student who answered the first part incorrectly may still answer the subsequent parts correctly.
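The effect of splitting a question into parts, with an optional “reveal” for early parts, can be sketched as below. This is a hypothetical Python illustration rather than the Numbas marking code: the `Part` structure, the mark values and the rule that a revealed part scores zero are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Part:
    answer: float  # expected numerical answer for this part
    marks: float   # marks available for this part


def mark_question(parts, submitted, revealed=frozenset(), tol: float = 1e-6) -> float:
    """Sum the marks over parts. A part whose solution was revealed earns nothing
    (an assumed rule), but the later parts can still be answered and marked."""
    total = 0.0
    for i, part in enumerate(parts):
        if i in revealed:
            continue  # marks for a revealed part are forfeited
        if submitted[i] is not None and abs(submitted[i] - part.answer) <= tol:
            total += part.marks
    return total


# A three-part question worth 5 marks in total (illustrative values).
parts = [Part(2.0, 1), Part(4.0, 2), Part(8.0, 2)]

print(mark_question(parts, [2.0, 4.0, 8.0]))                 # 5.0: all parts correct
print(mark_question(parts, [3.0, 4.0, 8.0]))                 # 4.0: first part wrong
print(mark_question(parts, [None, 4.0, 8.0], revealed={0}))  # 4.0: first part revealed
```

Under all-or-nothing marking the second and third students would both score zero; with parts they keep the marks for the steps they completed correctly.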

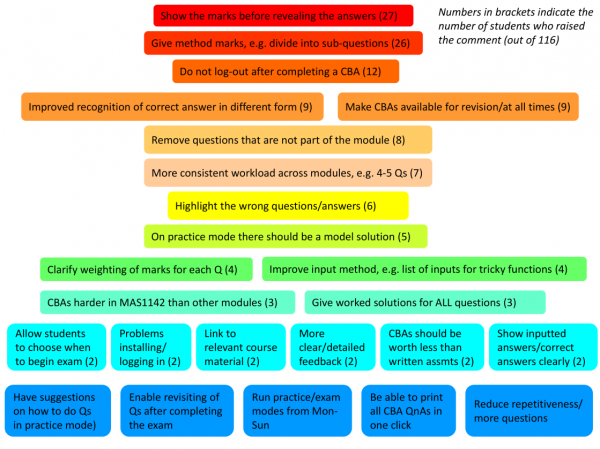

CBAs are most naturally suited to formulaic questions which access only low, surface cognitive skills (e.g. levels 1-3 on the Sangwin taxonomy). However, testing of deeper understanding is possible with careful question design. For example, by encouraging students to analyse their answer and draw some conclusion, one may access level 4 skills. This was incorporated into some of the modified questions in the 2012/2013 CBAs, one example being shown in Figure 4: after obtaining some algebraic solutions, students are asked to deduce key features of their curves, which requires careful further analysis of the solutions. Furthermore, it is shown elsewhere that CBA questions can be posed to probe level 7 skills (constructing an example/instance) [11].

There is a limit to how much information should be included in the model solution, and one should aim to cater for the majority of students. For those who require more guidance, it is natural to provide a link to further information, such as the appropriate lecture slides, ReCap recording or section of the notes. From next year, the Numbas CBAs will be run within the University’s Blackboard VLE, enabling easy linking to the Blackboard module content, although these links will need to be updated from year to year. Video tutorials can also be embedded within the model solution, and there is a growing bank of such clips within the School [19]. These facilities would serve to improve the feedback to the students.

The number of questions and the difficulty level should be as consistent as possible across similar modules. At the moment, most CBAs in stage 1 present 4-5 questions, with an anticipated working time of 2 hours per CBA. There were mixed calls from students for more and for fewer questions, which suggests that the current balance might be fair. Is the current level of difficulty suitable? Recall that CBAs were introduced as a platform for practising standard, “seen” questions (with written assignments being the domain of more challenging unseen problems). The student opinion that CBAs are slightly easier than written assignments suggests that the balance between the two is appropriate.

However, calls for more questions and a greater variety of questions, presumably from the more motivated students, should not be overlooked. A second set of CBAs could be offered, which are optional and feature a wider variety of questions for motivated students to tackle. It is uncertain whether this supplementary material, being formative, would attract enough users to warrant the effort of setting it up and maintaining it. Such optional CBAs should first be piloted in one module to assess their popularity and benefit.

Strategic Aspects

The most general, strategic decisions over CBA usage rest at the School level. Timing of CBAs is one example. Students complain of stress induced by concentrated hand-in deadlines, particularly in stage 1. Currently, each CBA has a two-week cycle: one week of practice followed by one week for the test. This is perhaps overly generous, and reducing each CBA to one week in total would allow greater flexibility for separating assignments. One could allow students to take the test at any time during the week. Related to this, a trivial but beneficial change would be to leave CBA practice mode open after the test as a revision tool. Shifting the CBA start/end time from Friday night to Sunday night would provide students with valuable free-study time prior to the deadline.

While each CBA originally had half the weighting of written assignments, they now have the same weighting (in stage 1). Compared to written assignments, CBAs are easier, test surface knowledge rather than deep understanding, and arguably their main success is in promoting learning and not assessing it. Hence this mark balance needs to be urgently redressed. Given the advantageous nature of CBAs for learning, one might argue that they do not justify a summative status at all. However, practically speaking, enough marks should be attached to CBAs to encourage the cohort to seriously attempt them.

The comments suggest that students mistakenly believe that CBAs and written assignments are somehow equivalent in our (the School’s) eyes, an impression not helped by their uniform weighting. Some recognise the fundamental differences between these assessment strategies, but a significant number do not, and question why they can’t simply have more CBAs. With this in mind, it is important to inform the students of the motivation for each type of assessment, why they complement each other, and what their distinct pedagogical roles are. What’s more, this project has revealed that students are keen to provide feedback on CBAs (and have a lot to say on them!), despite concerns that they are already “over-questionnaired” within modules (by end-of-module questionnaires). We should therefore not shy away from regularly polling their opinions of CBAs in the future.

Finally, a report on assessment would not be complete without a discussion of plagiarism. A strong merit of CBAs is that, by randomizing the parameters in each question, the opportunity for direct copying between students is eliminated. This does not rule out other forms of cheating, for example someone else taking the test, but the same is true for written assignments. There is also the possibility of cheating by hacking the CBA system to obtain answers. To prevent this, CBA security will have to be carefully monitored.

g. Conclusions

Stage 1 undergraduates in the School of Mathematics and Statistics were polled on their experiences with computer-based assessments over two consecutive years. These years marked the retirement of the i-assess package and the introduction of Numbas, a package developed in-house. Students engage well with CBAs, reporting their ease-of-use and an appreciation for their presence in the course. While CBAs adequately perform their role of assessing students, their main, and perhaps underestimated, pedagogical merit lies in directly promoting learning and understanding. By incorporating a practice mode with model solutions, the CBAs strongly support and enhance learning of core material, and enable students to gain confidence in practising standard techniques.

While CBAs are a valuable tool for learning and assessment, they have major restrictions, e.g. a lack of intelligent feedback, a limited ability to assess deeper learning, and a restriction to formulaic question styles. This means that in general they are restricted to assessing the lower cognitive skills in mathematics, e.g. factual recall, routine calculations and classification of objects. Probing some higher skills is possible, but only in limited scenarios and, as such, written assignments should remain the primary channel for assessment of deeper understanding and higher skills. CBAs should not replace or compete with conventional written assignments. With these respective roles in mind, a complementary balance of CBAs and written assignments, one that optimizes student learning and marking load, can be struck.

The introduction of Numbas led to a marked improvement in the ease-of-use reported by the students. Given the School’s technical control over the package, there is now freedom to gradually evolve Numbas so as to optimize its learning and assessment effectiveness, and the student experience. The most pertinent and pressing suggestions for improvement to Numbas and CBA usage within the School, based on the findings of this investigation, are summarized in Figure 5.

At present, there is an ongoing curriculum review in the School in which all forms of assessment are themselves being questioned and assessed. One possible outcome may be that CBAs are removed altogether as a form of continuous assessment. In these decisions, the value of CBAs in enhancing learning and understanding of core material, as evidenced here, should not be overlooked.

Appendix A: CBA Questionnaire

Bibliography

[1] Krantz, S., How to Teach Mathematics. 1998: American Mathematical Society.

[2] Numbas. Available from: http://www.ncl.ac.uk/maths/numbas/.

[3] Clarke, A., Designing Computer-based Learning Materials. 2001, Aldershot: Gower Publishing Ltd.

[4] Scheuermann, F.B., Julius, ed. The transition to computer-based assessment: new approaches to skills assessment and implications for large-scale testing. 2010, Office for Official Publications of the European Communities: Luxembourg. 211.

[5] Bishop, P., M. Beilby, and A. Bowman, Computer-based learning in mathematics and statistics. Computers & Education, 1992. 19(1-2): p. 131-143.

[6] Beevers, C.E., et al., The Calm before the storm! CAL in University Mathematics. Computers & Education, 1988. 12(1): p. 43-47.

[7] Harding, R.D. and D.A. Quinney, Mathwise and the UK Mathematics Courseware Consortium. Active Learning, 1996. 4: p. 53-57.

[8] Croft, A.C., et al., Experiences of using computer assisted assessment in engineering mathematics. Computers & Education, 2001. 37(1): p. 53-66.

[9] Sangwin, C.J. and M. Grove, STACK: addressing the needs of the “neglected learners”, in Proceedings of the WebAlt Conference 2005.

[10] Wikipedia entry on E-assessment. 05/03/2013; Available from: http://en.wikipedia.org/wiki/E-assessment.

[11] Sangwin, C.J., New opportunities for encouraging higher level mathematical learning by creative use of emerging computer aided assessment, in IJMESST 2003, 2003.

[12] Sangwin, C., Who uses STACK? A report on the use of the STACK CAA system, in Resources to support the learning and teaching of Mathematics, Statistics and Operational Research in Higher Education 2010, MSOR Network: Birmingham.

[13] MapleSoft Maple TA 9. Available from: http://www.maplesoft.com/products/mapleta/.

[14] WebWork Online Homework System. Available from: http://webwork.maa.org/intro.html.

[15] i-assess. Available from: http://www.ediplc.com/customised-assessments.asp

[16] Ramsden, P., Learning to Teach in Higher Education. 1992, London: Routledge.

[17] Smith, G., et al., Constructing mathematical examinations to assess a range of knowledge and skills. International Journal of Mathematical Education in Science and Technology, 1996. 27: p. 65.

[18] Bloom, B.S., et al., Taxonomy of Educational Objectives: Cognitive Domain. 1956, New York: McKay.

[19] School of Mathematics and Statistics Video Tutorials. Available from: http://vimeo.com/nclmaths/videos/all.